Introduction

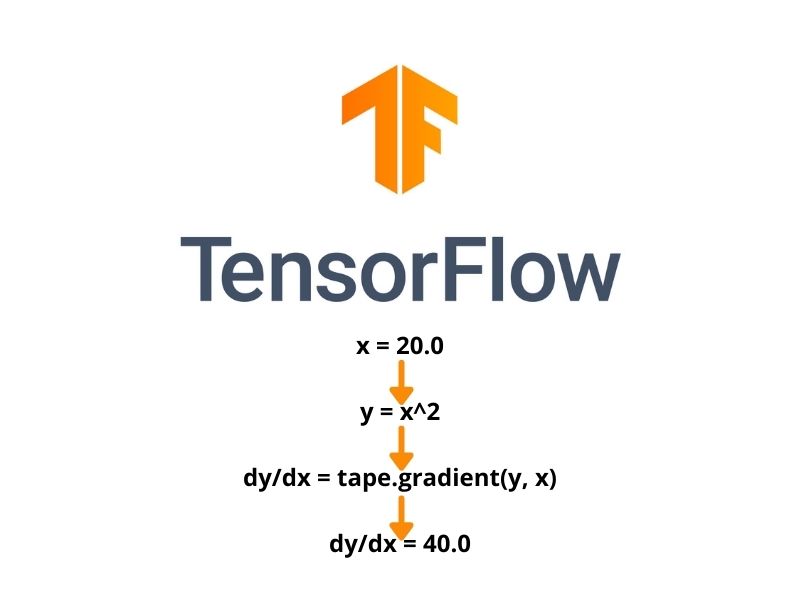

This post is meant to be an introduction to tf.GradientTape. tf.GradientTape is a tool that records TensorFlow computations and lets us compute gradients with respect to some variables. The possible computations are limited only by our imagination: they can be as simple as x ^ 3, or as complex as passing the variable(s) to a model.
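To give a feel for the "model" end of that range, here is a minimal sketch rather than a definitive recipe. The toy data, the one-feature linear model, and the names `xs`, `ys`, `model`, `grad_w` and `grad_b` are all assumptions made for illustration; none of them come from the original post.

```python
import tensorflow as tf

# Hypothetical toy data following y = 2x + 1 (illustrative only).
xs = tf.constant([[0.0], [1.0], [2.0], [3.0]])
ys = tf.constant([[1.0], [3.0], [5.0], [7.0]])

# Parameters of a one-feature linear model.
# tf.Variable objects are watched by the tape automatically.
w = tf.Variable([[0.0]])
b = tf.Variable([0.0])

def model(x):
    # Hypothetical model: a single linear layer written by hand.
    return tf.matmul(x, w) + b

with tf.GradientTape() as tape:
    # Mean squared error between predictions and targets.
    loss = tf.reduce_mean(tf.square(model(xs) - ys))

# Passing a list of sources returns the gradients in the same order.
grad_w, grad_b = tape.gradient(loss, [w, b])
print(grad_w, grad_b)
```

The gradients come back with the same shapes as `w` and `b`, so they can be handed directly to an optimizer or used in a manual update step.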

Here’s an example:

    import tensorflow as tf

    w = tf.Variable([1.0])

    with tf.GradientTape() as tape:
        loss = w * w

    tape.gradient(loss, w)
    # the line above returns a tensor with value 2, since d(w*w)/dw = 2w = 2 at w = 1

As we can see in the example, the variable w is created as a tf.Variable. If we had created it as a tf.constant instead, we would have to instruct the GradientTape to track it by adding the line “tape.watch(w)”. The result would be:

    import tensorflow as tf

    w = tf.constant(1.0)

    with tf.GradientTape() as tape:
        tape.watch(w)
        loss = w * w

    tape.gradient(loss, w)

Higher order computation

Example of a second derivative computation using GradientTape:

    # higher order GradientTape computation
    x = tf.Variable(1.0)

    with tf.GradientTape() as tape_2:
        with tf.GradientTape() as tape_1:
            y = x * x * x
        # computed inside tape_2 so that this gradient is itself recorded
        dyx = tape_1.gradient(y, x)  # 3x^2
    dy2x2 = tape_2.gradient(dyx, x)  # 6x

    print(dyx)
    dy2x2

Persistent GradientTape

Normally, a GradientTape instance can be used to compute gradients only once: its resources are released as soon as gradient() is called. This can be inconvenient, so to remedy it we can set “persistent=True” in the GradientTape parameters. We are then able to call gradient() on the same tape multiple times in our code. Example:

    x = tf.constant(3.0)

    with tf.GradientTape(persistent=True) as t:
        t.watch(x)
        y = x * x
        z = y * y

    dzx = t.gradient(z, x)  # 4x^3 = 108
    dyx = t.gradient(y, x)  # 2x = 6, a second call on the same tape

Without persistent=True, the second gradient call would throw the following error:

    RuntimeError: A non-persistent GradientTape can only be used to compute one set of gradients (or jacobians)

Done with the introduction to tf.GradientTape.

Useful links:

- Colab notebook with the examples shown above (in update)

- original TensorFlow documentation